A Professional Instrument Company Combining Excellence and Showcasing Its Strength: A Technical Architecture Case Study

Design Philosophy and Challenges:

In an era when technological advances are moving industries forward at unprecedented speed, a professional instrument company needs a robust, scalable architecture that meets current needs and leaves room for what comes next. Our company, with its emphasis on excellence and innovation, has designed a technical architecture that reflects both principles. It addresses three long-standing challenges: handling high-volume data, delivering real-time analytics, and maintaining system reliability.

The primary goal of our architecture is to support the expansive portfolio of instruments our company produces, from testing solutions to monitoring systems. To achieve this, we evaluated the technologies available on the market and combined them with our in-house expertise. This hybrid approach means that we use current tools while adapting them to our specific needs and industry demands.

Component Selection and Justification:

At the core of our architecture is Apache Kafka for high-throughput data streaming. Because data from our instruments must be processed and analyzed in real time, Kafka’s ability to handle massive data volumes with high fault tolerance makes it a critical component. Kafka is complemented by Apache Spark, a distributed processing engine for large data sets, which lets us perform complex data analysis without compromising on performance.
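As a minimal sketch of what a streamed instrument reading might look like, the snippet below encodes a reading as a keyed message and maps the key to a partition. The `Reading` class, its fields, and the partition count are hypothetical and not taken from our production code. The partition function uses CRC32 purely as a stand-in; Kafka's default partitioner hashes keys with murmur2. No Kafka client is involved, so this only illustrates the idea that messages with the same key land on the same partition and stay in order.

```python
import json
import zlib
from dataclasses import asdict, dataclass
from typing import Tuple


@dataclass(frozen=True)
class Reading:
    """One instrument measurement (hypothetical schema)."""
    instrument_id: str    # used as the message key
    timestamp_ms: int     # epoch milliseconds
    temperature_c: float


def encode(reading: Reading) -> Tuple[bytes, bytes]:
    """Return (key, value) as bytes, the shape a Kafka producer sends."""
    key = reading.instrument_id.encode("utf-8")
    value = json.dumps(asdict(reading), sort_keys=True).encode("utf-8")
    return key, value


def pick_partition(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition index.

    CRC32 is an illustrative stand-in; Kafka's default partitioner
    uses a murmur2 hash of the key instead.
    """
    return zlib.crc32(key) % num_partitions
```

Keying by instrument identifier is one possible design choice: it keeps each instrument's readings in order on a single partition, at the cost of uneven load if a few instruments produce most of the traffic.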

For managing and scaling our database operations, we selected Amazon DynamoDB. Unlike traditional relational databases, DynamoDB’s NoSQL model lets us store and retrieve instrument data efficiently, with automatic scaling and low-latency reads and writes. This flexibility is crucial because our data model must adapt as our instruments and industry requirements change.
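To make the storage model concrete, here is a small sketch that converts a reading into DynamoDB's low-level attribute-value format, where strings are tagged `S` and numbers are tagged `N` and sent as strings. The attribute names and the choice of `instrument_id` as partition key and `timestamp_ms` as sort key are assumptions for illustration; the actual table design is not described here. The code builds the item only and does not call AWS.

```python
from decimal import Decimal
from typing import Any, Dict


def to_attribute_value(value: Any) -> Dict[str, Any]:
    """Encode a Python value in DynamoDB's attribute-value format."""
    if isinstance(value, bool):           # check bool first: bool subclasses int
        return {"BOOL": value}
    if isinstance(value, str):
        return {"S": value}
    if isinstance(value, (int, float, Decimal)):
        return {"N": str(value)}          # numbers are transmitted as strings
    if value is None:
        return {"NULL": True}
    raise TypeError(f"unsupported type: {type(value).__name__}")


def reading_item(instrument_id: str, timestamp_ms: int,
                 temperature_c: float) -> Dict[str, Any]:
    """Build an item for a hypothetical readings table."""
    return {
        "instrument_id": to_attribute_value(instrument_id),  # partition key (assumed)
        "timestamp_ms": to_attribute_value(timestamp_ms),    # sort key (assumed)
        "temperature_c": to_attribute_value(temperature_c),
    }
```

With this key layout, all readings for one instrument share a partition key and can be queried as a time range on the sort key.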

For machine learning, we chose TensorFlow for its widespread adoption and extensive support for a range of machine learning tasks. Our prediction models require high accuracy and low latency, making TensorFlow a good fit for training models and serving predictions in real time.

Finally, to ensure the security and integrity of our data, we have integrated IAM (Identity and Access Management) services from AWS. IAM provides granular control over who can perform what actions on our resources, ensuring that only authorized personnel have access to sensitive data.
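The kind of granular control described above can be sketched as an IAM policy document. The function below builds a read-only policy for a single DynamoDB table. The table name, region, account ID, and statement ID are hypothetical placeholders, and the choice of permitted actions is an assumption about what an analyst role might need, not a description of our actual policies.

```python
import json
from typing import Any, Dict


def read_only_table_policy(region: str, account_id: str,
                           table: str) -> Dict[str, Any]:
    """Build an IAM policy allowing reads on one DynamoDB table only."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadInstrumentData",        # hypothetical statement ID
                "Effect": "Allow",
                # Read actions only; writes and deletes are not granted.
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": table_arn,
            }
        ],
    }


def policy_json(policy: Dict[str, Any]) -> str:
    """Serialize the policy as JSON, the form in which IAM accepts it."""
    return json.dumps(policy, indent=2)
```

Because IAM denies anything not explicitly allowed, a role holding only this policy cannot write to the table or read any other table.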

Deployment Strategy:

Our deployment strategy is designed to be both flexible and scalable. We use Docker containers to deploy our application components, ensuring consistency across environments. Because containers are lightweight, we can spin up new instances quickly, which is essential for responding to rapidly evolving market demands.

Kubernetes, a highly reliable container orchestration tool, is employed to manage our containerized services. Kubernetes allows us to deploy, manage, and scale our application with ease, automating many of the tedious tasks involved in running a distributed system.
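One way Kubernetes automates scaling is the Horizontal Pod Autoscaler, which computes a desired replica count as `ceil(currentReplicas * currentMetric / targetMetric)` and skips changes when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces that calculation. Whether our deployment uses this autoscaler is an assumption, and the replica bounds and metric values in the usage note are invented for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count following the Horizontal Pod Autoscaler formula."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (assumed values by default).
    return max(min_replicas, min(max_replicas, wanted))
```

For example, with 4 replicas averaging 90% CPU against a 60% target, the ratio is 1.5 and the function returns 6.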

Architectural Case Study:

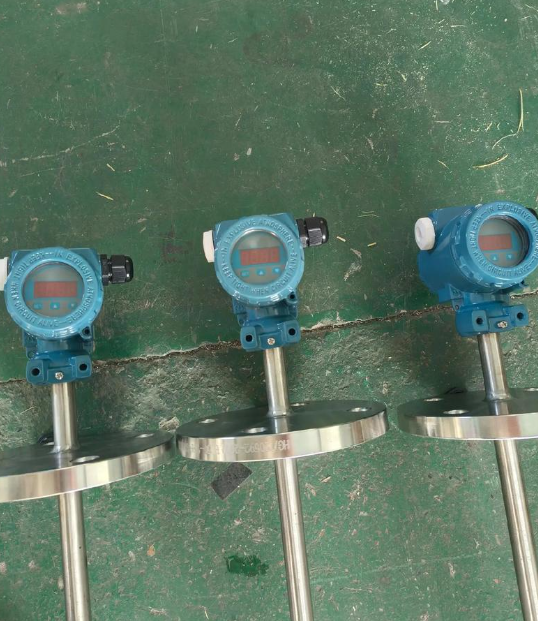

To better illustrate the effectiveness of our architecture, let us consider a specific use case. Imagine a scenario where our temperature monitoring instruments are deployed in remote locations. The data generated by these instruments needs to be sent to our servers for immediate analysis.

With Kafka as the data pipeline, the instruments send data packets in real time. The data is then processed by Spark, which identifies anomalies or trends using machine learning algorithms. The results are stored in DynamoDB, ensuring low-latency access for our analysts and managers.
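The anomaly-detection step can be illustrated with a deliberately simple stand-in. The sketch below flags a temperature reading when it deviates from the mean of the preceding window by more than a set number of standard deviations (a rolling z-score). This is not the Spark job or the machine learning model used in production; the window size, threshold, and sample values are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List, Tuple


def find_anomalies(readings: Iterable[float], window: int = 5,
                   threshold: float = 3.0) -> List[Tuple[int, float]]:
    """Return (index, value) pairs whose z-score against the
    previous `window` readings exceeds `threshold`."""
    history: Deque[float] = deque(maxlen=window)
    anomalies: List[Tuple[int, float]] = []
    for index, value in enumerate(readings):
        # Only score once a full window of earlier readings exists.
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # A zero spread gives no scale to compare against, so skip.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append((index, value))
        # Every reading, anomalous or not, enters the window.
        history.append(value)
    return anomalies
```

Given the readings `[20.0, 20.1, 19.9, 20.0, 20.1, 35.0, 20.0]`, the function flags only the spike at index 5. A production version would likely exclude flagged values from the window so that one spike does not mask the readings that follow it.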

The security provided by IAM ensures that only authorized personnel can access this sensitive data. The use of Docker and Kubernetes ensures that the system remains robust and scalable, even as the number of instruments in use grows.

This setup has proven highly effective. In 2025, we saw a significant increase in the accuracy and speed of our data analysis. With less time spent on manual data handling and more on strategic decision-making, our company has been able to innovate and expand its product lineup to meet the growing demands of the market.

In conclusion, the technical architecture that our professional instrument company has implemented showcases a perfect blend of cutting-edge technologies, strict security measures, and robust scalability. This design not only improves operational efficiency but also positions us to lead in our industry’s future technological advancements.